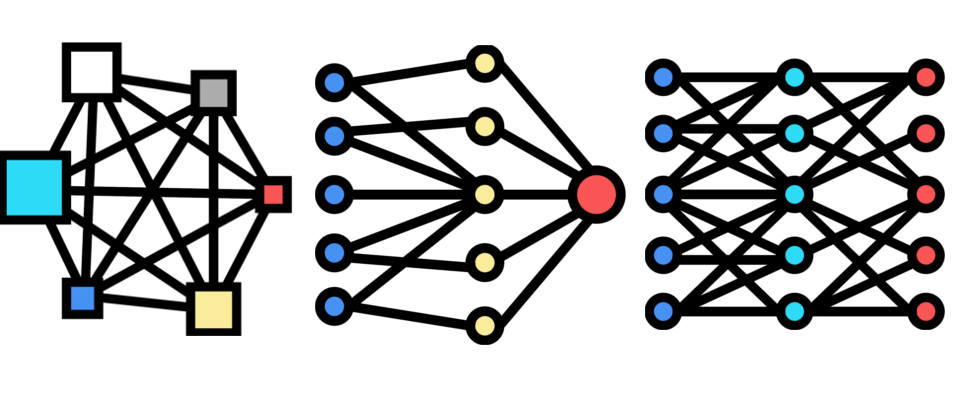

Deep learning is the technology driving many of the recent breakthroughs in artificial intelligence (AI). In most cases, such an AI is an app that has learned a skill, such as feature detection, from an annotated dataset over extensive training cycles, a process called machine learning. The hope is that it will then apply the acquired knowledge correctly to new data. Of course, you can build such an AI in many different ways. Still, the most successful approach is the artificial neural network, a structure inspired by the human brain that consists of many layers of programmatic neurons, which can be connected in different ways.

The way these layers are connected defines how the network processes input data. Put simply, learning means finding the network configuration that best solves a particular problem. The adjective "deep" indicates that these networks are large and contain many layers. Deep learning frameworks act as a kind of operating system for AI, on which specific apps, also called models, are developed and trained. The people who train these models are called machine learning engineers, a profession that is becoming increasingly popular.

Everybody familiar with AI and deep learning knows TensorFlow and PyTorch, two remarkable frameworks for machine learning developed by the US tech giants Google and Facebook.

However, a lesser-known third player from the Far East called PaddlePaddle has quietly started to shake up the field. The Chinese search-engine operator Baidu initially released the toolkit under an open-source license in 2016. The name is an acronym for PArallel Distributed Deep LEarning, and this is precisely what PaddlePaddle offers:

The framework sets new standards for ultra-large-scale deep learning and facilitates parallel training of models with hundreds of billions of parameters across hundreds of nodes. In the past, models were mainly trained on a single node or a few nodes. As a result, the framework became the most scalable machine learning platform on the market, although others have been catching up in the meantime.

Graph Learning and Quantum Neural Networks

But this is far from the only innovation: graph learning, a cutting-edge technology in machine learning, plays a vital role in PaddlePaddle. Network graphs are simply diagrams that show the interconnections between a set of entities in a network. The goal of graph learning is to extract relevant features of such network graphs with machine learning algorithms. The graphs can represent social networks, knowledge graphs, or transport networks, among others. It is not surprising that neural networks, here called Graph Neural Networks (GNNs), play a dominant role in this domain as well. A typical application of GNNs is link prediction, where you try to predict the probability that two network nodes will establish a link.
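To make the idea of link prediction more concrete, here is a minimal sketch in plain NumPy. It does not use a GNN or the PGL API; it scores unlinked node pairs with the classic common-neighbors heuristic, which is an assumption chosen purely for illustration. A trained GNN would instead learn node embeddings and turn a pair score into a probability. The toy graph and the function name are hypothetical.

```python
import numpy as np

def common_neighbor_scores(adjacency):
    """Score every node pair by the number of neighbors the two nodes share."""
    adjacency = np.asarray(adjacency)
    # Entry (i, j) of A @ A counts the paths of length two between i and j,
    # which equals the number of common neighbors in an undirected graph.
    scores = (adjacency @ adjacency).astype(float)
    np.fill_diagonal(scores, 0.0)   # a node cannot link to itself
    scores[adjacency > 0] = 0.0     # ignore pairs that are already linked
    return scores

# Hypothetical toy network with edges 0-1, 0-2, 1-3, 2-3, 3-4.
adjacency = np.array([
    [0, 1, 1, 0, 0],
    [1, 0, 0, 1, 0],
    [1, 0, 0, 1, 0],
    [0, 1, 1, 0, 1],
    [0, 0, 0, 1, 0],
])

scores = common_neighbor_scores(adjacency)
print(scores)
```

Pairs with a higher score, such as nodes 0 and 3, which share two neighbors, would be suggested as likely future links, for example as friend recommendations.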

Link prediction can be used to build a recommendation system that proposes products or even new digital friendships in a social network. But of course, there are many other fields where GNNs can be applied: text classification, traffic forecasting, and modeling the spread of diseases like COVID-19 are only some of them. Since this type of network is still very new, much research is going on in this field. The Paddle Graph Learning (PGL) toolkit provides all the features needed to explore this new technology out of the box.

But this one sounds even more like science fiction: at Baidu's "Wave Summit 2020" deep learning developer conference, the brand-new quantum machine learning development kit Paddle Quantum entered the stage. Duan Runyao, head of the Quantum Computing Institute of the Baidu Research Institute, proudly announced it as the first deep learning framework in China to support quantum machine learning. He is convinced that there is an entangled and inseparable relationship between artificial intelligence and quantum computing and intends to build a bridge between the two fields.

Paddle Quantum provides a framework to construct and train quantum neural networks (QNNs), including easy-to-use quantum machine learning (QML) development kits that support combinatorial optimization, quantum chemistry, and other cutting-edge quantum applications on standard hardware.

The package also provides a quantum encoder to transform classical information into quantum states. It thus plays a crucial role in using quantum algorithms to solve classical problems, especially in quantum machine learning tasks. Currently, there are not many real-world applications of this technology, which is barely two years old. However, in the future, it may well open up stunning perspectives and revolutionize the way AI and computing work.
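What "transforming classical information into quantum states" can mean is easiest to see with amplitude encoding, one common encoding scheme. The following is a minimal NumPy sketch under that assumption; it is not the Paddle Quantum API, and the function name is hypothetical. A classical vector is padded to a power-of-two length and normalized so that its entries can serve as the amplitudes of a quantum state.

```python
import numpy as np

def amplitude_encode(values):
    """Map a classical vector to the amplitudes of a normalized state vector."""
    vec = np.asarray(values, dtype=float)
    if not np.any(vec):
        raise ValueError("cannot encode an all-zero vector")
    # Pad to the next power of two so the state fits a whole number of qubits.
    dim = 1 << max(1, (vec.size - 1).bit_length())
    padded = np.zeros(dim)
    padded[:vec.size] = vec
    # Quantum states have unit norm: the squared amplitudes sum to one.
    return padded / np.linalg.norm(padded)

state = amplitude_encode([3.0, 4.0])
print(state)       # amplitudes of a one-qubit state
print(state ** 2)  # measurement probabilities of the two basis states
```

Here the vector (3, 4) becomes the amplitudes (0.6, 0.8), so measuring the qubit would yield the two basis states with probabilities 0.36 and 0.64.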

Does all of this sound like science fiction? Apart from these ambitious lighthouse projects, the framework is already shaping the present with a solid toolkit of well-established key AI technologies for industrial needs. The developer and user community of PaddlePaddle has been growing rapidly in recent years and already includes almost 85,000 companies and nearly 2 million active developers. This has led to more than 200,000 models being trained with the framework. In addition, global tech companies like Intel, Nvidia, and Huawei have recently started supporting the framework.

PaddlePaddle in Action

The adoption of the framework in China is advancing quite rapidly, and AI applications already play an important role in everyday life. PaddlePaddle-based AI even played a role in China's fight against the COVID-19 pandemic on three different fronts:

In diagnostics, CT scans are an essential tool for detecting signs of pneumonia, which leads to severe COVID-19 cases. Detecting these signs soon after infection increases the chances of successful treatment. Furthermore, it helps predict who will need one of the scarce intensive care units and who will not.

As early as the beginning of 2020, the company LinkinMed released an AI-powered pneumonia screening platform to support diagnostics at Xiangnan University. The platform reached a detection accuracy of 92%.

Tracking how the coronavirus spreads and predicting the future path of the pandemic is another field where AI can play an essential role in defeating this or any future pandemic. For example, just by monitoring search queries and social network conversations, you might be able to detect a new outbreak in its early stages and therefore contain it more efficiently. When people in some regions start searching for fever medication, this could indicate an outbreak there. Combining this information with travel data can give real-time insight into the dynamics of a pandemic and can make it possible to predict future virus outbreaks, much like a weather forecast.

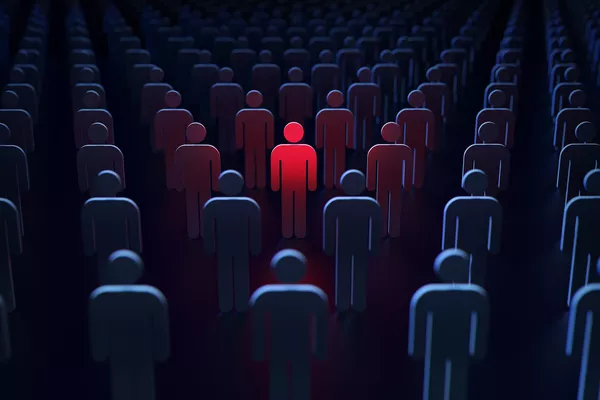

The next topic is a critically acclaimed use-case of AI: China established a strict mask mandate for its citizens in Corona hot spots. It did not take long until AI could automatically supervise this mask mandate and spot people without masks in the street. The open-source model provided by Baidu reached a classification accuracy of 97.27% with a robust performance in long-tail scenarios and could detect if individuals in a crowd of people are wearing their masks.

The use of AI and deep learning in surveillance is not limited to masks. The Chinese authorities have been ramping up something called the "Skynet" system, the most extensive surveillance infrastructure in the world. According to Chinese media, this system relies heavily on facial recognition technology and big data analysis. And it is not too hard to guess which deep learning framework is one of the leading players in this game. The mega-cities Chongqing, Shenzhen, and Shanghai are considered the three most heavily surveilled cities in the world. Apparently, in Shanghai, it is possible to identify a person in the street within just seconds.

Officially, the surveillance system is only used to fight crime. Still, it is not hard to imagine that authorities could easily use this powerful tool to monitor and suppress political opponents. George Orwell's prediction from his visionary book 1984 is currently becoming a reality in China at high speed, with the heavy support of deep learning frameworks like PaddlePaddle. Big Brother is watching you, equipped with the power of highly parallel AI. Quite scary. Whether this state of affairs is desirable is a question each society has to answer for itself.

It is the old dilemma of humanity in times of technological breakthroughs, showing up again and again: the same technology powers applications that change our daily lives for the better, more than we could have imagined in our wildest dreams, yet it can also be used to build the most sophisticated surveillance system humanity has ever seen. The only possible answer here is that technology is neither good nor evil; it is just what we make of it.

Interested in making the world a better place with AI, or in building your own super-smart surveillance system right away? Here is a more detailed look at the ready-to-use key technologies the PaddlePaddle framework offers. To get started with training and deploying your first AI apps, all you need to know is some Python. However, if you want to dig deeper into the core framework, some C++ is certainly useful, since the core is implemented in C++ with a strong focus on performance.

The good news is that many of the AIs are already pre-trained and just waiting to be explored: the framework offers a powerful suite of more than 130 official models from the four main categories computer vision (CV), natural language processing (NLP), speech recognition, and recommendation systems.

Computer Vision with PaddleCV

Computer vision and natural language processing are among the framework's most mature and most widely used features. Computer vision, for example, plays a crucial role in autonomous vehicles and drone navigation. PaddleCV can be considered a complete framework that offers toolkits for eight types of pattern recognition and computer vision problems, including:

Image classification: Image classification refers to the problem of assigning an image to one of several possible categories. These can be dogs and cats or cars and bicycles, for example. Image classification is one of the best-solved problems in the AI world, and there are many well-understood models available. PaddlePaddle offers a fairly large palette of pre-trained models for this task, such as AlexNet, VGG, GoogLeNet, ResNet, Inception-v4, MobileNet, SE-ResNeXt, and ShuffleNet, all of which are ready to download. Don't be scared by the cryptic names. All of these model names are industry standards and can easily be found in the literature.

Target detection: Image classification always takes the whole image into account. In target detection, by contrast, the task is to find a target of a given category within an image, assign the correct category, and obtain its coordinates. Some of the available models here are SSD, PyramidBox, Faster RCNN, and MaskRCNN.

Image segmentation: In target detection, you are interested in the coordinates of target objects. In image segmentation, by contrast, you try to partition the image into groups of pixels that represent objects, transforming the image into a representation that is easier to analyze. Image segmentation plays an important role in autonomous driving, where street scenes must be segmented and understood to prevent accidents involving pedestrians and cars. It can also play a significant role in improving medical diagnosis through AI-supported image analysis.

Video classification: The goal of video classification is to understand the contextual information of a video. It is not sufficient to understand the individual frames of a video; the classifier has to analyze them within a shared context.

Image generation: Image generation is where AI starts getting creative. The goal here is to generate new images from user input, which can be random data or another image. This technique makes it possible to add features to an image or to change the faces of people in pictures, for example.

Metric learning: The goal of metric learning, also called similarity learning, is to learn a similarity function describing how similar or related two objects are. There are many applications for this in recommendation systems, visual identity tracking, face identification verification, and speaker verification.
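As a rough illustration of what a similarity function looks like, the sketch below compares embedding vectors with cosine similarity in NumPy. In real metric learning, the embeddings come from a trained network and the training objective shapes them; here the vectors are hypothetical hand-picked values, and cosine similarity is assumed as the measure for illustration only.

```python
import numpy as np

def cosine_similarity(a, b):
    """Return the cosine of the angle between two embedding vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical embeddings; a trained model would produce these from images.
face_a = [1.0, 0.0, 1.0]
face_b = [2.0, 0.0, 2.0]   # same direction as face_a, different length
face_c = [0.0, 1.0, 0.0]   # orthogonal to face_a

same = cosine_similarity(face_a, face_b)
different = cosine_similarity(face_a, face_c)
print(same, different)
```

A verification system would then compare such a score against a threshold: pairs pointing in nearly the same direction count as the same identity, while nearly orthogonal pairs do not.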

Keypoint detection: In keypoint detection, the human body is abstracted to a set of key points. By tracking and extrapolating these key points, it is possible to predict the next movements of humans. The technology already plays a crucial role in motion classification, abnormal behavior detection, and autonomous driving.

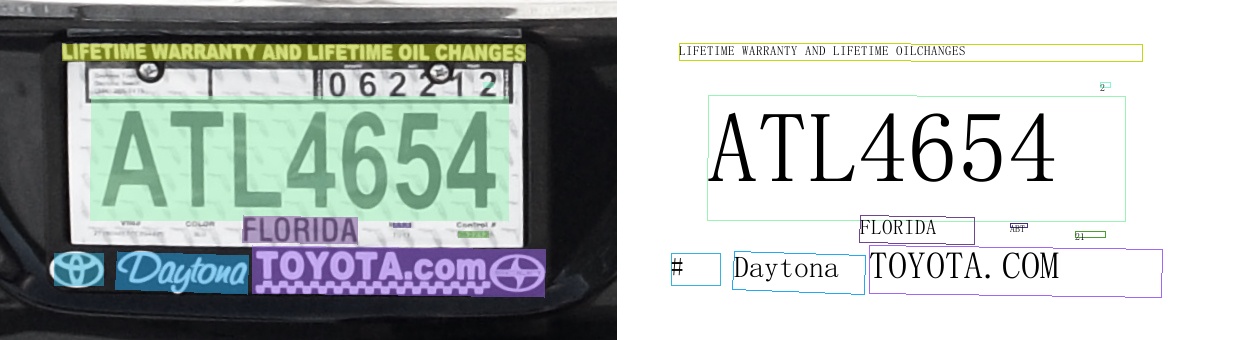

PaddleOCR

A typical application of computer vision is Optical Character Recognition (OCR). PaddleOCR is a universal OCR system that currently supports more than 80 languages and is extensible. In addition, it is incredibly lightweight: the size of a PP-OCR model is only 3.5 MB for 6622 Chinese characters. Another model for recognizing 63 alphanumeric symbols requires just 2.8 MB. The system can also handle complex layouts with distorted fonts, such as traffic signs.

Compared to Tesseract, which is currently considered the leading open-source OCR system, this is a significant improvement if you want to use OCR in mobile applications. The standard trained data for Tesseract takes up 43 MB for Chinese and still 23 MB for English.

In fact, the OCR system of our PDF to Word Converter is partly based on PaddleOCR, so you might have used it already without noticing. Since PaddleOCR is Python-based, it can easily be installed using the pip package manager.

Speech Recognition and Natural Language Processing

There is a clear difference between speech recognition and natural language processing. The first refers to the challenge of converting acoustic signals into a machine-readable format. A typical example is speech-to-text conversion. Natural language processing (NLP), on the other hand, goes a bit further: here, the goal is to make the computer understand the user input and react to it helpfully. For example, if you ask the computer a question, it should ideally give you a meaningful answer.

Natural Language Processing: As an allusion to Google's BERT NLP algorithm, the PaddlePaddle community developed its own NLP algorithm called ERNIE. It uses a knowledge-based approach that combines pre-trained models with a pool of multi-source knowledge. This knowledge base can simply be an extensive collection of texts, like Wikipedia, Reddit, and other popular forums on the web. The system continuously learns vocabulary, structure, semantics, and other aspects from the data pool. BERT only learns original language signals, but ERNIE enhances the model's semantic representation capabilities.

Baidu compared the performance of ERNIE 2.0 with BERT, and the result was that it outperforms BERT and also XLNet on 16 tasks, including English challenges on GLUE benchmarks. The General Language Understanding Evaluation (GLUE) benchmark is a state-of-the-art collection of resources for training and evaluating language understanding systems. In numbers, ERNIE outperforms BERT for English tasks by 3.1%. However, for Chinese tasks, the lead was less clear. A new application of these technologies is the field of content intelligence, where artificial intelligence classifiers are used to gain insights into the effectiveness and quality of a business’ content.

Recommendation Systems

Life would be pretty boring without all the shiny movie recommendations that pop up whenever you log into your Netflix account, suggesting what to watch next. Whoever comes up with these proposals seems to know us quite well, since they usually fit our interests. The magic behind these suggestions is the work of recommendation systems. Usually, they use data about past purchases and social networks to predict what you might want to watch next. Technically, there are several approaches to implementing a recommendation system.

A brand new approach in this field is using recurrent neural networks (RNNs). Most neural networks work in one direction by their definition. You feed them with some data and receive the output. The main innovation of RNNs is that they allow previous outputs to be used as inputs again, which means that the data is processed several times by the network in internal loops.

RNNs can therefore develop a "memory" that retains information about the data processed before. Since the information looping inside the network cannot be accessed from outside, it is often called a "hidden state."

In large RNNs, there can be multiple of these hidden state layers in different regions of the network. Thus, RNNs are probably the first type of AI with short-term memory.
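The feedback loop described above can be sketched in a few lines of NumPy. This is a plain, untrained recurrent layer with hand-picked toy weights, not a PaddlePaddle model; all names and values are assumptions chosen for illustration. At every step, the previous hidden state is fed back in together with the new input.

```python
import numpy as np

def rnn_forward(inputs, w_in, w_rec, bias):
    """Run a simple recurrent layer over a sequence and return all hidden states."""
    hidden = np.zeros(w_rec.shape[0])   # the memory starts out empty
    states = []
    for x in inputs:
        # The previous hidden state re-enters the network with the new input.
        hidden = np.tanh(w_in @ x + w_rec @ hidden + bias)
        states.append(hidden)
    return np.stack(states)

# Hypothetical toy weights: one input feature, two hidden units.
w_in = np.array([[1.0], [0.5]])
w_rec = np.array([[0.5, 0.0], [0.0, 0.5]])
bias = np.zeros(2)

# Only the first step carries a signal; the later inputs are zero.
sequence = np.array([[1.0], [0.0], [0.0]])
states = rnn_forward(sequence, w_in, w_rec, bias)
print(states)
```

Although the second and third inputs are zero, the hidden state stays non-zero: it still carries a fading trace of the first input. This is the short-term memory that session-based systems rely on.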

That is why RNNs have found their role in session-based or conversation-based recommendation systems. A session-based recommendation system uses the user's short-term activity history to predict which content the user is likely to be interested in and click on next.

However, this model also has some shortcomings. For example, in systems where sessions are usually anonymous, the user behavior recorded during a single session is typically limited. It is therefore difficult to generate effective proposals.

PaddlePaddle in Benchmarks

Because PaddlePaddle is not very widespread in the western world, little benchmarking data is available that compares it directly to the US deep learning frameworks. The numbers in the following benchmark, which compares PaddlePaddle with PyTorch and TensorFlow, originate from a Chinese publication about the framework.

On average, PaddlePaddle outperforms its competitors by about 42% during training for computer vision tasks. For NLP, however, the advantage during training is only about 9%.

PaddleLite

PaddleLite is a version optimized for mobile devices. It allows the deployment of trained models on ARM processors and Mali GPUs, which are graphics processing units optimized for smartphones. Adreno GPUs by Qualcomm and Raspberry Pi systems are supported as well, and PaddleLite is cross-compatible with Apple and Android systems.

Getting started

If you want to dive into this new framework, the PaddlePaddle GitHub repository is a great starting point. For each sub-package, there is a section that also contains tutorials in English and Chinese. In most cases, the Chinese version is more detailed, but with automated translation at hand, it should not be too hard to access this information. If you are interested in PaddleOCR, it is also worth having a look at our PaddleOCR Engine Example, which guides you through the setup process for a working OCR engine.